Overview

Hightouch supports streaming events from Apache Kafka, Confluent Cloud, and Redpanda clusters in real time.

All three are Kafka-compatible platforms:

- Apache Kafka: the open-source distributed event streaming platform.

- Confluent Cloud: a fully managed Kafka-as-a-service offering with enterprise features.

- Redpanda: a high-performance, Kafka API–compatible platform designed as a drop-in replacement.

Since they all speak the Kafka protocol, setup and event ingestion in Hightouch are nearly identical.

Once connected, Hightouch automatically extracts, transforms, and loads your event data into Hightouch Events. Your data can then be synced to your warehouse or streamed to our catalog of real-time destinations.

Setup

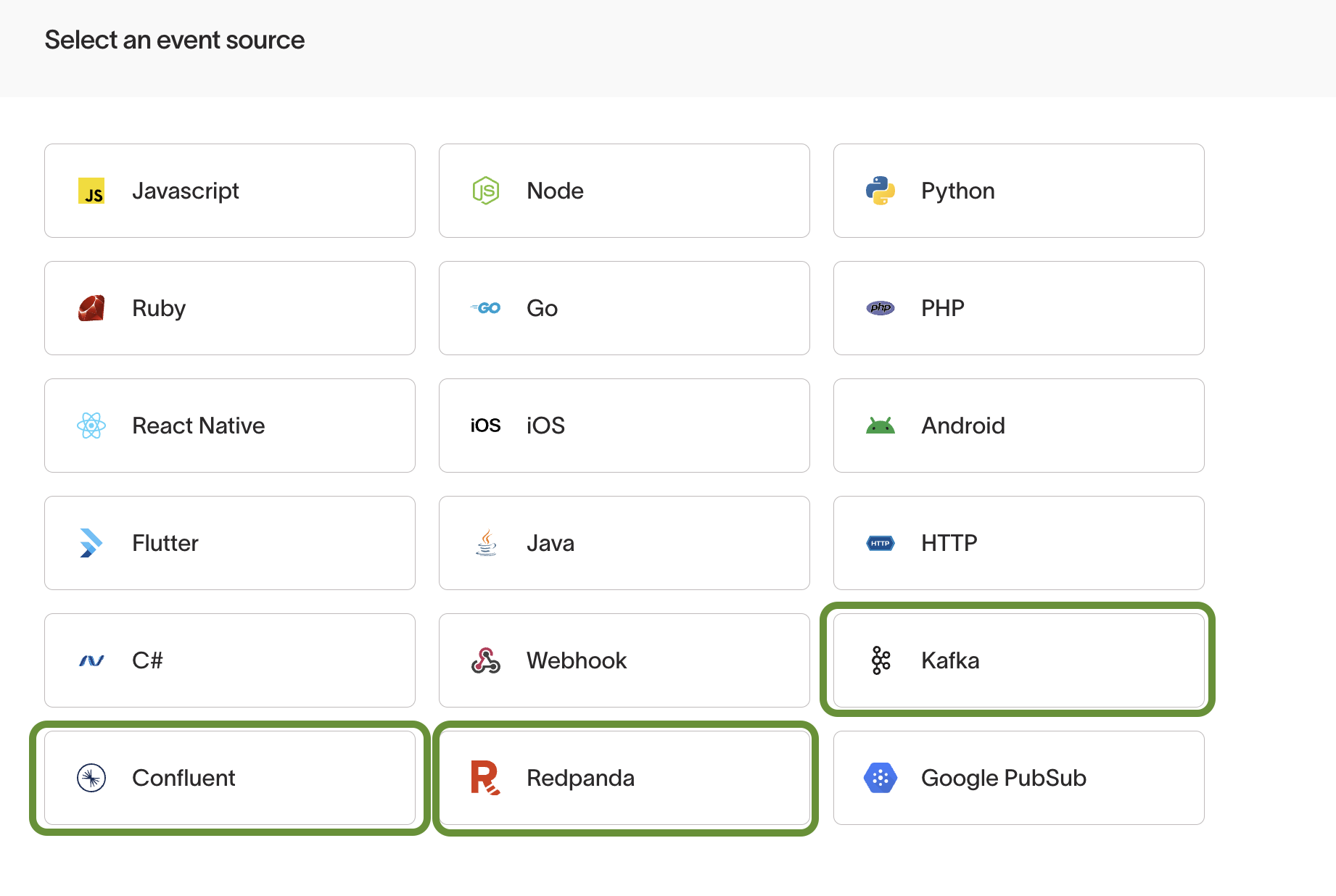

To get started, create an event source and select one of the following:

- Kafka for Apache Kafka

- Confluent for Confluent Cloud

- Redpanda for Redpanda clusters

All three sources use the same configuration flow.

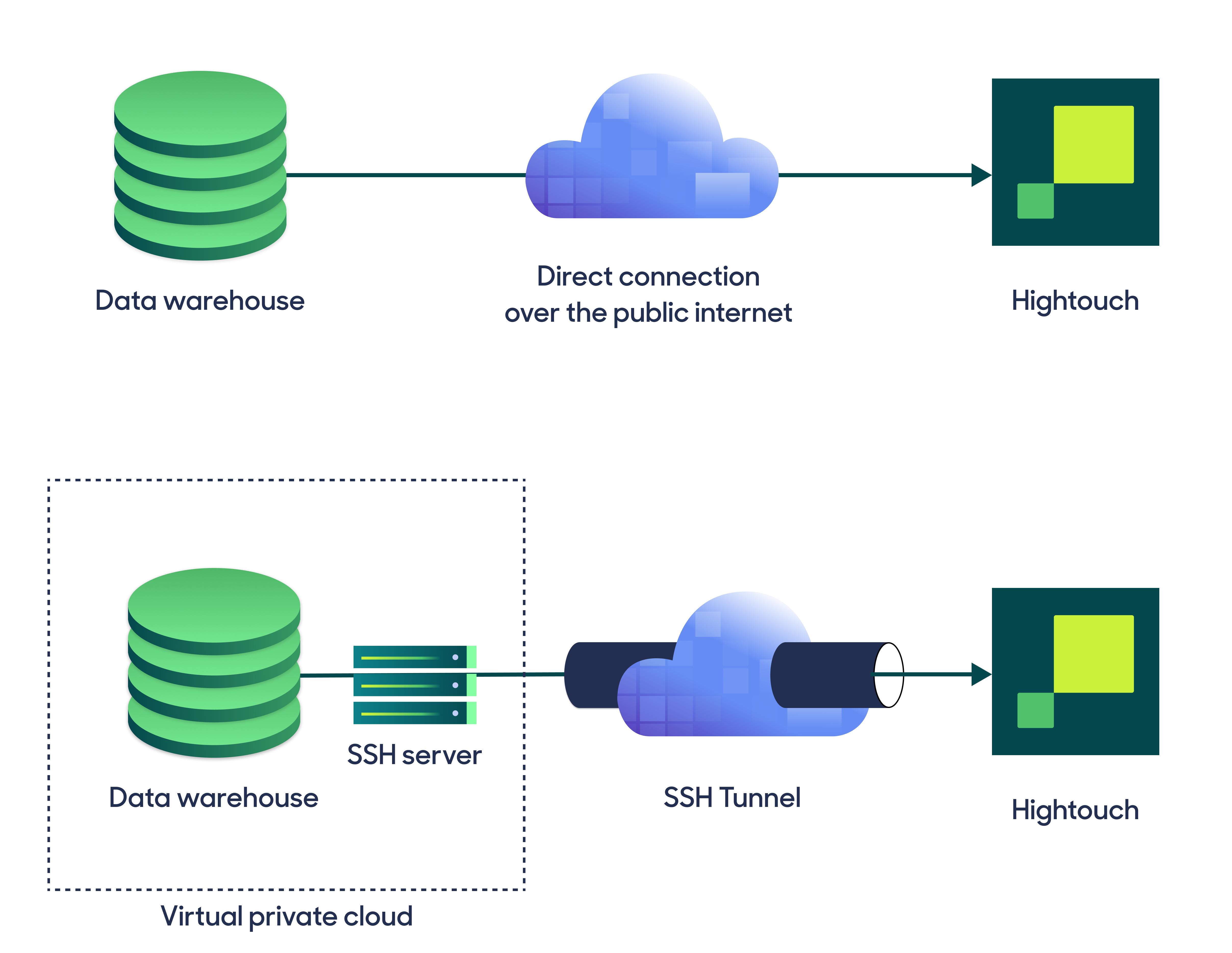

Choose connection type

Hightouch can connect directly to Kafka over the public internet or via an SSH tunnel. Since data is encrypted in transit via TLS, a direct connection is suitable for most use cases. You may need to set up a tunnel if your Kafka instance is on a private network or virtual private cloud (VPC).

Hightouch supports both standard and reverse SSH tunnels. To learn more about SSH tunneling, refer to Hightouch's tunneling documentation.

Configure your source

The required fields are the same across Apache Kafka, Confluent, and Redpanda:

- Broker URL:

  - Kafka: hostname:port for your brokers.

  - Confluent: provided in your Confluent cluster settings.

  - Redpanda: hostname:port for your Redpanda brokers.

- Topic: The topic to consume events from. Messages must be JSON-formatted.

- Authentication:

  - Kafka: Multiple auth methods are supported.

  - Confluent: Use SASL/SSL credentials from Confluent Cloud.

  - Redpanda: Uses the Kafka-compatible auth methods configured in your cluster.
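Before entering a broker URL, it can help to sanity-check that each address follows the hostname:port shape. The snippet below is a minimal sketch of such a check. The `parse_brokers` helper is hypothetical and not part of Hightouch, and accepting a comma-separated list follows the usual Kafka bootstrap-server convention rather than anything Hightouch documents here.

```python
from typing import List, Tuple


def parse_brokers(broker_url: str) -> List[Tuple[str, int]]:
    """Split a broker string into (hostname, port) pairs, raising on bad input."""
    brokers = []
    # Assumption: several brokers may be given as a comma-separated list.
    for entry in broker_url.split(","):
        entry = entry.strip()
        # rpartition splits on the last colon, so the port is always the final piece.
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected hostname:port, got {entry!r}")
        port_number = int(port)
        if not 1 <= port_number <= 65535:
            raise ValueError(f"port out of range in {entry!r}")
        brokers.append((host, port_number))
    return brokers
```

For example, `parse_brokers("broker-1.example.com:9092")` returns a single pair, while a value with no port raises a `ValueError`.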

Message format

Hightouch Events requires Kafka messages be encoded as JSON.
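As a rough illustration of the JSON requirement, the sketch below serializes a payload the way a producer might before sending it, and checks whether a raw message value parses as JSON. Both helper names are hypothetical, and the check accepts any valid JSON document; whether Hightouch additionally expects a JSON object at the top level is not stated here.

```python
import json


def encode_value(payload: dict) -> bytes:
    """Serialize a payload to compact UTF-8 JSON bytes for use as a message value."""
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


def is_json_message(raw: bytes) -> bool:
    """Return True if the raw message value parses as JSON."""
    try:
        json.loads(raw)
    except (UnicodeDecodeError, json.JSONDecodeError):
        # Binary formats and plain text fail to decode or parse.
        return False
    return True
```

Running `is_json_message` against a sample of a topic's messages is one way to catch binary or plain-text payloads before connecting the source.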

Event structure

All events from Kafka, Confluent, and Redpanda are ingested into Hightouch as track events.

- Message `key`, `value`, and `headers` → `properties`

- Metadata such as `topic`, `partition`, and `offset` → `context.kafka`

For example, the following Kafka message:

{
  "partition": 1,
  "offset": 123,
  "timestamp": 1721659050648,
  "key": "k1",
  "value": {
    "user_id": "user_123",
    "amount": 123.45
  },
  "headers": [
    {
      "key": "h1",
      "value": "abc"
    }
  ]
}

is ingested into Hightouch as:

{
  "type": "track",
  "event": "Kafka Event",
  "properties": {
    "key": "k1",
    "value": {
      "user_id": "user_123",
      "amount": 123.45
    },
    "headers": {
      "h1": "abc"
    }
  },
  "context": {
    "kafka": {
      "topic": "my-topic",
      "partition": 1,
      "offset": "123"
    }
  },
  "timestamp": "2024-07-22T14:37:30.648Z"
}
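The reshaping in this example can be sketched in a few lines of Python. This is an illustration of the mapping described in this section, not Hightouch's actual implementation: the `to_track_event` function is hypothetical, the topic is passed in separately because the sample message does not carry it, and the event timestamp is assumed to come from the Kafka message timestamp in epoch milliseconds.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_track_event(message: Dict[str, Any], topic: str) -> Dict[str, Any]:
    """Reshape a decoded Kafka message into a track event."""
    # Headers arrive as a list of {"key", "value"} pairs and become a flat object.
    headers = {h["key"]: h["value"] for h in message.get("headers", [])}
    # Assumption: the event timestamp is the Kafka timestamp (epoch ms) in UTC.
    ts = EPOCH + timedelta(milliseconds=message["timestamp"])
    return {
        "type": "track",
        "event": "Kafka Event",
        "properties": {
            "key": message.get("key"),
            "value": message.get("value"),
            "headers": headers,
        },
        "context": {
            "kafka": {
                "topic": topic,
                "partition": message["partition"],
                # The offset appears as a string in the ingested event.
                "offset": str(message["offset"]),
            }
        },
        "timestamp": ts.isoformat(timespec="milliseconds").replace("+00:00", "Z"),
    }
```

Called with the sample message and the topic `my-topic`, the function returns the same `properties` and `context.kafka` objects as the ingested event shown in this section.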

Field mappings

You can configure how Hightouch transforms messages from your Kafka topic to Hightouch track events. Mappings can be configured for the following fields:

- `event`

- `userId`

- `anonymousId`

- `messageId`

- `timestamp`

You can also use Functions to standardize schemas or enrich event data.
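Conceptually, a field mapping picks a value out of the Kafka message and places it on one of the top-level event fields. The sketch below shows that idea only. The dotted-path syntax and the `lookup` and `apply_mappings` names are hypothetical, and mappings configured in Hightouch may be expressed differently.

```python
from typing import Any, Dict, Optional

MAPPABLE_FIELDS = ("event", "userId", "anonymousId", "messageId", "timestamp")


def lookup(source: Dict[str, Any], path: str) -> Optional[Any]:
    """Follow a dotted path such as 'value.user_id'; return None if a step is missing."""
    current: Any = source
    for part in path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current


def apply_mappings(event: Dict[str, Any], mappings: Dict[str, str]) -> Dict[str, Any]:
    """Return a copy of the event with mapped fields filled from its properties."""
    result = dict(event)
    for field, path in mappings.items():
        if field not in MAPPABLE_FIELDS:
            raise ValueError(f"{field!r} is not a mappable field")
        value = lookup(event.get("properties", {}), path)
        # Fields whose path does not resolve are left as they were.
        if value is not None:
            result[field] = value
    return result
```

For instance, mapping `userId` to the hypothetical path `value.user_id` would copy a `user_id` from the message value onto the event's `userId` field.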

Schema enforcement

Event Contracts can be attached to Kafka, Confluent, and Redpanda sources just like any other Event Source.

Tips and troubleshooting

If you encounter an error or have a question not listed below and need assistance, don't hesitate to reach out. We're here to help.